How to Prevent Memory Bloat in Mongo

Feed Mongo!!

Several months ago at Yipit, we decided to cross the NoSQL rubicon and port a large portion of our data storage from MySQL over to MongoDB.

One of the main drivers behind our move to Mongo was the composition of our data (namely, the data behind our recommendation engine), which consists of loosely structured, denormalized objects best represented as JSON-style documents. Here’s an example of a typical recommendation object.

How Key Expansion Causes Memory Bloat

Because any given recommendation can have a number of arbitrary nested attributes, Mongo’s “schemaless” style is much preferred to the fixed schema approach imposed by a relational database.

The downside here, though, is that this structure produces extreme data duplication. Whereas a MySQL column is stored only once for a given table, an equivalent JSON attribute is repeated for each document in a collection.

Why Memory Management in Mongo is Crucial

When your data set is sufficiently small, this redundancy is usually acceptable; however, once you begin to scale up, it becomes less palatable. At Yipit, an average key size of 100 bytes per document, spread over roughly 65 million documents, adds somewhere between 7GB and 10GB of data (factoring in indexes) without providing much value at all.
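As a quick sanity check on those numbers, here is a back-of-the-envelope calculation. It only covers the raw key bytes; the remainder of the 7GB to 10GB range is attributed above to indexes, which this sketch does not try to model. The function name is made up for illustration.

```python
# Rough estimate of the space spent on key names alone, using the figures above.
AVG_KEY_BYTES_PER_DOC = 100    # average bytes of key names per document
DOCUMENT_COUNT = 65_000_000    # roughly 65 million documents


def key_overhead_gb(key_bytes_per_doc: int, doc_count: int) -> float:
    """Return the total space consumed by key names, in decimal gigabytes."""
    return key_bytes_per_doc * doc_count / 1e9


raw_overhead = key_overhead_gb(AVG_KEY_BYTES_PER_DOC, DOCUMENT_COUNT)
print(f"{raw_overhead:.1f} GB of key names before indexes")  # 6.5 GB
```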

Mongo is so awesome, on good days, because it maps data files to memory. Memory-based reads and writes are super fast. On the other hand, Mongo is absolutely not awesome once your working data set surpasses the memory capacity of the underlying box. At that point, painful page faults and locking issues ensue. Worse yet, if your indexes begin to grow too large to remain in memory, you are in trouble (seriously, don’t let that happen).

Quick Tips on Memory Management

You can get around this memory problem in a number of ways. Here’s a non-exhaustive list of options:

Add higher memory machines or more shards if cash is not a major constraint (I would recommend the latter to minimize the scourge of write locks).

Actively utilize the “_id” key, instead of always storing the default ObjectID.

Use namespacing tricks for your collections. In other words, create separate collections for recommendations in different cities, rather than storing a city key within each collection document.

Embed documents rather than linking them implicitly in your code.

Store the right data types for your values (e.g. integers are more space efficient than strings).

Get creative about non-duplicative indexing on compound keys.
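Two of the tips above (reusing “_id” and namespacing collections by city) can be sketched with plain dictionaries. This is only an illustration under assumed names: the `user_id` and `deals` fields, the `recommendations_` prefix, and both helper functions are hypothetical and not taken from Yipit's schema.

```python
def build_user_recommendations(user_id: int, deal_ids: list) -> dict:
    """Store the natural key in _id rather than an ObjectId plus a user_id field."""
    return {"_id": user_id, "deals": deal_ids}


def collection_name_for(city: str) -> str:
    """Encode the city in the collection name so no document repeats a city key."""
    return "recommendations_" + city.lower().replace(" ", "_")


doc = build_user_recommendations(42, [1001, 1002])
target = collection_name_for("New York")
print(target, doc)
```

With a driver such as PyMongo, `target` would be the collection the document is written to; that call is left out here so the sketch runs without a database.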

The Key Compression Approach

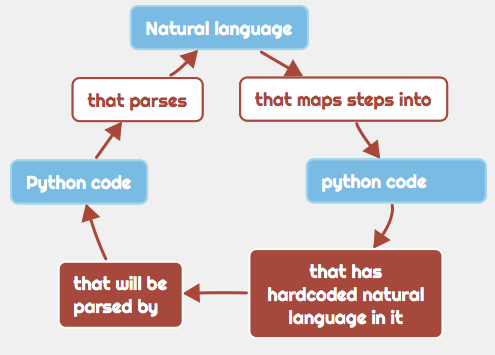

After you’ve checked off those options, you may still wish to cut down on stored key length. The easiest path here probably involves creating a translation table in your filesystem that compresses keys on the way to Mongo from your code and then decompresses during the return trip.
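A minimal sketch of such a translation table, assuming a hardcoded mapping. The key names below are invented for illustration; any key missing from the table (such as “_id”) passes through unchanged.

```python
# Hypothetical translation table: long key used in code -> short key stored in Mongo.
KEY_MAP = {
    "recommendation_score": "s",
    "deal_title": "t",
    "city_name": "c",
}
# Inverse table for the return trip.
REVERSE_KEY_MAP = {short: long for long, short in KEY_MAP.items()}


def compress_keys(document: dict) -> dict:
    """Swap long keys for short ones before the document is written."""
    return {KEY_MAP.get(key, key): value for key, value in document.items()}


def decompress_keys(stored: dict) -> dict:
    """Restore the long keys after the document is read back."""
    return {REVERSE_KEY_MAP.get(key, key): value for key, value in stored.items()}


original = {"_id": 7, "recommendation_score": 0.93, "deal_title": "Half-off sushi"}
stored = compress_keys(original)
restored = decompress_keys(stored)
```

The short keys must be unique and must not collide with any unmapped key, otherwise the round trip is no longer lossless.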

For simplicity's sake, a developer could hardcode the translations, updating the table on schema changes. While that works, it would be nice if there were a Mongo ORM for Python that just handled it for us automatically. It just so happens that MongoEngine is a useful, Django-style ORM on top of the PyMongo driver. Sadly, it does not handle key compression.

Automatic Compression Tool

As a weekend project, I thought that it would be cool to add this functionality. Here’s an initial crack at it (warning: it may not be production ready).

The docstrings and inline comments are fairly extensive, but I should repeat a couple of main points:

This logic adds some overhead to the process of defining a class. This happens only once, when the class is loaded, and quick benchmarking seems to suggest that it’s not overly prohibitive. That being said, I mention several ways of improving the efficiency of this code. First, you could move it directly into the TopLevelDocumentMetaclass or you could process the attrs before instantiating the class. Both would avoid the double work incurred here.
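To make the "runs once, at class definition" point concrete, here is a standalone sketch of a metaclass that builds a key map while the class is being created. It does not use MongoEngine and is not the code from this post: `Field`, `CompressedKeysMeta`, `_key_map`, and the `defendant` and `verdict` fields are hypothetical stand-ins.

```python
import itertools
import string


def short_key_generator():
    """Yield 'a', 'b', ..., 'z', 'aa', 'ab', ... as compact key names."""
    for length in itertools.count(1):
        for letters in itertools.product(string.ascii_lowercase, repeat=length):
            yield "".join(letters)


class Field:
    """Hypothetical stand-in for an ORM field declaration."""


class CompressedKeysMeta(type):
    """Assign a short key to every Field while the class body is processed."""

    def __new__(mcs, name, bases, attrs):
        cls = super().__new__(mcs, name, bases, attrs)
        short_keys = short_key_generator()
        # attrs preserves definition order, so short keys are assigned in order.
        cls._key_map = {
            attr: next(short_keys)
            for attr, value in attrs.items()
            if isinstance(value, Field)
        }
        return cls


class TrialDocument(metaclass=CompressedKeysMeta):
    defendant = Field()
    judge = Field()
    verdict = Field()


print(TrialDocument._key_map)
```

Because the mapping is computed inside `__new__`, the cost is paid when the module is imported, not on every document instantiation.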

Embedded fields are not handled completely in this code. The first time you set an embedded document, the underlying fields will be compressed. However, if you change the nested fields subsequently but do not change the parent field, the nested fields will not be reset. This means that you’ll have an uncompressed key for each nested field that you change. You can get around this by dropping the mapped collection and recreating it (simple operation). I plan to handle this logic in the code shortly.
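One possible way to close that gap is to compress recursively at save time, so nested keys are translated no matter when they were changed. The sketch below works on plain dictionaries and is an assumption about how this could be done, not the fix planned for the code in this post; the nested map format (a short key, optionally paired with a child map) and the field names are invented.

```python
def compress_nested(document: dict, key_map: dict) -> dict:
    """Compress keys at every level of a nested document.

    key_map values are either a short key, or a (short key, child map) tuple
    for fields that hold an embedded document.
    """
    compressed = {}
    for key, value in document.items():
        entry = key_map.get(key, key)
        short, child_map = entry if isinstance(entry, tuple) else (entry, None)
        if isinstance(value, dict) and child_map is not None:
            value = compress_nested(value, child_map)
        compressed[short] = value
    return compressed


# Hypothetical map with one embedded document under "merchant".
NESTED_KEY_MAP = {
    "title": "t",
    "merchant": ("m", {"name": "n", "neighborhood": "h"}),
}

deal = {"title": "Half-off sushi", "merchant": {"name": "Sushi Spot", "neighborhood": "SoHo"}}
compressed_deal = compress_nested(deal, NESTED_KEY_MAP)
```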

Indexing in the meta attribute of the class should work as expected, though I would generally suggest that you set indexes administratively as a best practice.

The Final Mapped Output

Here is a working example of the code (you’ll need to add an abstract class to make this work).

When you define the TrialDocument class, this document will be created in a collection titled “trial_document_mapping”.

If you were to then remove the judge field from the TrialDocument and add a reporter field, you’d get the following:

If you were to then go into the shell, you could interact with MongoEngine like this:

Success! We’ve got compressed keys. Just one thing before we go. Beyond key space optimization, this is also a quick primer for smart value storage. Never use long string field values like this if you can help it (we can definitely help it here by using integers).
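As a small illustration of that last point, a long string value can be swapped for an integer code on the way in and translated back on the way out. The `Verdict` enum and its members are hypothetical; they are not fields from the example above.

```python
from enum import IntEnum


class Verdict(IntEnum):
    """Hypothetical integer codes standing in for long string values."""
    GUILTY = 1
    NOT_GUILTY = 2
    MISTRIAL = 3


def encode_verdict(label: str) -> int:
    """Turn a human-readable label into the compact integer that gets stored."""
    return Verdict[label.upper().replace(" ", "_")].value


def decode_verdict(code: int) -> str:
    """Turn the stored integer back into a readable label."""
    return Verdict(code).name.replace("_", " ").title()


stored_value = encode_verdict("not guilty")
readable = decode_verdict(stored_value)
```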

Next Steps Ahead

Hope that’s interesting and (even better) useful. I’ll try to update this post once I’ve worked out all the kinks with embedded objects and sped up the class instantiation process.

Ben Plesser is a Developer at Yipit.

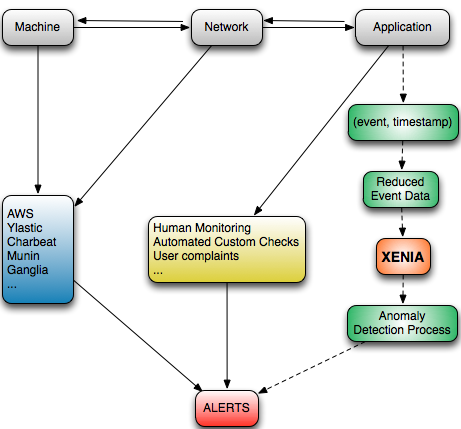

